Introduction

This is a summary of a few topics that should be kept in mind when a Multi-Gigabit Transceiver (MGT) is employed in an FPGA design. It’s not a substitute for reading the relevant user guide, nor a tutorial. Rather, it’s here to point at issues that may not be obvious at first glance.

The terminology and signal names are those used with Xilinx FPGAs. The transceiver is referred to as GTX (Gigabit Transceiver), but other variants of transceivers, e.g. GTH and GTZ, are to a large extent the same components with different bandwidth capabilities.

Overview

GTXs, which are the basic building blocks for common interface protocols (e.g. PCIe and SATA), are becoming an increasingly popular solution for communication between FPGAs. As the GTX instance presents a clock and a parallel data interface, it’s easy to mistake it for a simple channel that moves the data to the other end in a failsafe manner. A more realistic view of the GTX is as a front end for a modem, with possible bit errors and a need to synchronize serial-to-parallel data alignment at the receiver. Designing with the GTX also requires attention to classic communication-related topics, e.g. the use of data encoding, equalizers and scramblers.

As a result, there are a few application-dependent pieces of logic that need to be developed to support the channel:

- The possibility of bit errors on the channel must be handled

- The alignment from a bit stream to a parallel word must be taken care of (which bit is the LSB of the parallel word in the serial stream?)

- If the transmitter and receiver aren’t based on a common clock, a protocol that injects and tolerates idle periods on the data stream must be used, or the clock difference will cause data underflows or overflows. Sending the data in packets is a common solution. In the pauses between these packets, special skip symbols must be inserted into the data stream, so that the GTX receiver’s clock correction mechanism can remove such symbols from, or add them to, the stream presented to the application logic, which runs at a clock slightly different from that of the received data stream.

- Odds are that a scrambler needs to be applied on the channel. The reason is that an equalizer assumes that the bit stream is uncorrelated on average: Any average correlation between bit positions is considered ISI and is “fixed”. Scrambling requires logic that creates the scrambling sequence as well as logic that synchronizes the receiver to it. See Wikipedia
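To get a feeling for how often skip symbols are needed, here is a rough back-of-the-envelope sketch in Python. It assumes that each side’s oscillator is specified with a tolerance in ppm, and that one skip symbol absorbs a drift of one symbol; the function name and parameters are made up for illustration, and a real protocol will leave a healthy margin below this figure.

```python
def max_symbols_between_skips(tx_ppm: int, rx_ppm: int, correctable: int = 1) -> int:
    """Worst-case number of symbols after which the two clocks have drifted
    apart by `correctable` symbols, given each oscillator's tolerance in ppm."""
    worst_case_ppm = tx_ppm + rx_ppm          # the two clocks err in opposite directions
    return correctable * 1_000_000 // worst_case_ppm


if __name__ == "__main__":
    # Two +/-100 ppm oscillators: a skip opportunity is needed at least this often
    print(max_symbols_between_skips(100, 100))
```

In other words, the poorer the oscillators, the shorter the packets (or the more frequent the skip symbols) have to be.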

Having said the above, it’s not uncommon that no bit errors are ever observed on a GTX channel, even at very high rates, and possibly with no equalization enabled. This can’t be relied on, however, as there is no express guarantee for the actual error probability of the channel.

Clocking

The clocking of the GTXs is an issue in itself. Unlike the logic fabric, each GTX has a limited number of possible sources for its reference clock. It’s mandatory to ensure that the reference clock(s) are present in one of the allowed dedicated inputs. Each clock pin can function as the reference clock of up to 12 particular GTXs.

It’s also important to pay attention to the generation of the serial data clocks for each GTX from the reference clock(s). It’s not only a matter of what multiplication ratios are allowed, but also how to allocate PLL resources and their access to the required reference clocks.

QPLL vs. CPLL

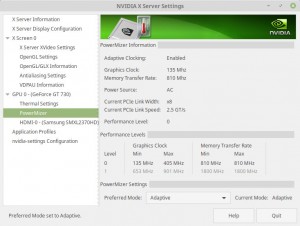

Two types of PLLs are available for producing the serial data clock, which typically runs at several GHz: QPLLs and CPLLs.

The GTXs are organized in groups of four (“quads”). Each quad shares a single QPLL (Quad PLL), which is instantiated separately (as a GTXE2_COMMON). In addition, each GTX has a dedicated CPLL (Channel PLL), which can generate the serial clock for that GTX only.

Each GTX may select its clock source from either the (common) QPLL or its dedicated CPLL. The main difference between these is that the QPLL covers higher frequencies. High-rate applications are hence forced to use the QPLL. The downside is that all GTXs sharing the same QPLL must have the same data rate (except that each GTX may divide the QPLL’s clock by a different ratio). The CPLL allows for greater flexibility of the clock rates, as each GTX can pick its clock independently, but within a limited frequency range.
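The “same rate, except for a divider” constraint can be checked mechanically. The following Python sketch tests whether a set of lane rates could share one QPLL. The set of per-channel dividers (1, 2, 4, 8, 16) is my assumption and should be verified against the user guide for the device at hand; the PLL’s own frequency range is deliberately ignored, and the function name is made up.

```python
ASSUMED_DIVIDERS = (1, 2, 4, 8, 16)  # assumption: verify against the user guide


def shared_qpll_plan(lane_rates_mbps):
    """Return (undivided_rate, {lane_rate: divider}) if every lane rate is the
    fastest lane's rate divided by an allowed divider, otherwise None."""
    base = max(lane_rates_mbps)
    plan = {}
    for rate in lane_rates_mbps:
        if base % rate != 0 or base // rate not in ASSUMED_DIVIDERS:
            return None                      # this lane needs its own (C)PLL
        plan[rate] = base // rate
    return base, plan


if __name__ == "__main__":
    print(shared_qpll_plan([10000, 5000, 2500]))  # compatible rates
    print(shared_qpll_plan([10000, 6000]))        # incompatible: None
```

Lanes that fall outside the plan would have to fall back on their CPLLs, provided that their rates are within the CPLL’s range.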

Jitter

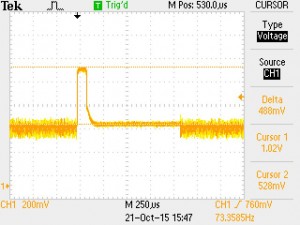

Jitter on the reference clock(s) is the silent killer of GTX links. It’s often neglected by designers because “it works anyhow”, but jitter on the reference clock has a disastrous effect on the channel’s quality, which can be far worse than that of a poor PCB layout. As both jitter and poor PCB layout (and/or cabling) contribute to the bit error rate and the channel’s instability, the PCB design is often blamed when things go bad. And indeed, playing with the termination resistors or similar black-magic actions sometimes “fixes it”. This makes people believe that GTX links are extremely sensitive to every via or curve in the PCB trace, which is not the case at all. They are, on the other hand, very sensitive to the reference clock’s jitter. And with some luck, a poorly chosen reference clock can be compensated for with a very clean PCB trace.

Jitter is commonly modeled as a noise component which is added to the timing of the clock transition, i.e. t=kT+n (n is the noise). Consequently, it is often defined in terms of the RMS of this noise component, or a maximal value which is crossed at a sufficiently low probability. The treatment of a GTX’ reference clock requires a slightly different approach; the RMS figures are not necessarily a relevant measure. In particular, clock sources with excellent RMS jitter may turn out inadequate, while other sources, with less impressive RMS figures, may work better.

Since the QPLL or CPLL locks on this reference clock, jitter on the reference clock results in jitter in the serial data clock. The prevailing effect is on the transmitter, which relies on this serial data clock; the receiver is mainly based on the clock it recovers from the incoming data stream, and is therefore less sensitive to jitter.

Some of the jitter, in particular “slow” jitter (based upon low frequency components), is fairly harmless, as the other side’s receiver clock synchronization loop will cancel its effect by tracking the random shifts of the clock. On the other hand, very fast jitter in the reference clock may not be picked up by the QPLL/CPLL, and is hence harmless as well.

All in all, there’s a certain band of frequency components in the clock’s timing noise spectrum which remains relevant: The band that causes jitter components which are slow enough for the QPLL/CPLL to track, and hence present on the serial data clock, but too fast for the receiver’s tracking loop to follow. The measurable expression for this selective jitter requirement is given in terms of phase noise frequency masks, or sometimes as the RMS jitter in bandwidth segments (e.g. PCIe Base spec 2.1, section 4.3.7, or Xilinx’ AR 44549). The spectrum masks required for the GTX are published by the hardware vendors. The spectral behavior of clock sources is often more difficult to predict: Even when noise spectra are published in datasheets, they are commonly given only for certain scenarios, as typical figures.
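The “RMS jitter in a bandwidth segment” can be estimated from a phase noise plot by integrating only the relevant band. The Python sketch below uses the textbook relation between single-sideband phase noise L(f) and RMS jitter; the function name, the data points and the band edges are made up for illustration, and the trapezoidal integration on linear values is crude when the points are sparse.

```python
import math


def rms_jitter_seconds(carrier_hz, offsets_hz, phase_noise_dbc_hz):
    """Integrate single-sideband phase noise L(f) (in dBc/Hz) over the given
    offset frequencies and convert the result to RMS jitter in seconds."""
    linear = [10 ** (level / 10) for level in phase_noise_dbc_hz]
    area = 0.0
    for i in range(1, len(offsets_hz)):
        df = offsets_hz[i] - offsets_hz[i - 1]
        area += 0.5 * (linear[i] + linear[i - 1]) * df   # trapezoidal rule
    rms_phase_rad = math.sqrt(2 * area)                  # factor 2: both sidebands
    return rms_phase_rad / (2 * math.pi * carrier_hz)


if __name__ == "__main__":
    # Hypothetical 100 MHz clock with a flat -100 dBc/Hz between 1 MHz and 2 MHz
    jitter = rms_jitter_seconds(100e6, [1e6, 2e6], [-100.0, -100.0])
    print(f"{jitter * 1e12:.1f} ps RMS in this band")
```

Feeding the same function with different band edges shows how two clock sources with a similar total RMS jitter can differ a lot within the band that actually matters.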

8b/10b encoding

Several standardized uses of MGT channels (SATA, PCIe, DisplayPort etc.) involve a specific encoding scheme between payload bytes for transmission and the actual bit sequence on the channel. Each (8-bit) byte is mapped to a 10-bit word, based upon a rather peculiar encoding table. The purpose of this encoding is to ensure a balance between the number of 0’s and 1’s on the physical channel, allowing AC-coupling of the electrical signal. The encoding also ensures frequent toggling between 0’s and 1’s, which keeps the receiver’s clock recovery loop (“CDR”) properly bit-synchronized. Other things that are worth noting about this encoding:

- As there are 1024 possible code words covering 256 possible input bytes, some of the excess code words are allocated as control characters. In particular, a control character designated K.28.5 is often referred to as “comma”, and is used for synchronization.

- The 8b/10b encoding is not an error correction code despite its redundancy, but it does detect some errors, if the received code word is not decodable. On the other hand, a single bit error may lead to a completely different decoded word, without any indication that an error occurred.

Scrambling

In short: If an equalizer is applied, the user-supplied data stream must be random. If the data payload can’t be ensured to be random by itself (this is almost always the case), a scrambler must be defined in the communication protocol, and applied in the logic design.

Applying a scrambler on the channel is a tedious task, as it requires a synchronization mechanism between the transmitter and receiver. It’s often quite tempting to skip it, as the channel will work quite well even in the absence of a scrambler, even where it’s needed. However, in the long run, occasional channel errors are typically experienced.

The rest of this section attempts to explain the connection between the equalizer and scrambler. It’s not the easiest piece of reading, so it’s fine to skip it, if my word on this is enough for you.

In order to understand why scrambling is probably required, it’s first necessary to understand what an equalizer does.

The problem equalizers solve is the filtering effect of the electrical media (the “channel”) through which the bit stream travels. Both cables and PCBs reduce the strength of the signal, but even worse: The attenuation depends on the frequency, and reflections occur along the metal trace. As a result, the signal doesn’t just get smaller in magnitude, but it’s also smeared over time. A perfect, sharp, step-like transition from -1200 mV to +1200 mV at the transmitter’s pins may end up as a slow and round rise from -100 mV to +100 mV. Because of this slow motion of the transitions at the receiver, the clear boundaries between the bits are broken. Each transmitted bit keeps leaving its traces way after its time period. This is called Inter-Symbol Interference (ISI): The received voltage at the sampling time for the bit at t=0 depends on the bits at t=-T, t=-2T and so on. Each bit effectively produces noise for the bits coming after it.

This is where the equalizer comes in. The input of this machine is the time samples of the bit at t=0, but also a number of measured voltage samples of the bits before and after it. By making a weighted sum of these inputs, the equalizer manages, to a large extent, to cancel the Inter-Symbol Interference. In a way, it implements a reverse filter of the channel.

So how does the equalizer acquire the coefficients for each of the samples? There are different techniques for training an equalizer to work effectively against the channel’s filtering. For example, cellular phones do their training based upon a sequence of bits on each burst, which is known in advance. But when the data stream runs continuously, and the channel may change slightly over time (e.g. a cable is being bent) the training has to be continuous as well. The chosen method for the equalizers in GTXs is therefore continuous.

The Decision Feedback Equalizer, for example, starts with making a decision on whether each input bit is a ‘0’ or ‘1’. It then calculates the noise signal for this bit, by subtracting the expected voltage for a ‘0’ or ‘1’, whichever was decided upon, from the measured voltage. The algorithm then slightly alters the weights of the sum in a way that removes any statistical correlation between the noise and the previous samples. This works well when the bit sequence is completely random: There is no expected correlation between any two input samples, and if such exists, it’s rightfully removed. Also, the adaptation converges into a compromise that works best, on average, for all bit sequences.

But what happens if there is a certain statistical correlation between the bits in the data itself? The equalizer will specialize in reducing the ISI for the bit patterns occurring more often, possibly performing very badly on the less frequent patterns. The equalizer’s role is to compensate for the channel’s filtering effect, but instead, it adds an element of filtering of its own, based upon the common bit patterns. In particular, note that if a constant pattern runs through the channel when there’s no data for transmission (zeros, idle packets etc.), the equalizer will specialize in getting that no-data through, and mess up the actual data.
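This effect can be reproduced with a toy model. The Python sketch below is a textbook LMS-adapted decision feedback equalizer with two feedback taps, running on a noiseless channel that adds 0.4 of the previous symbol to the current one. It is not the GTX’ actual algorithm (which I don’t know the details of); the channel, the step size and all names are assumptions made for illustration only.

```python
import random


def train_dfe(symbols, post_cursor=0.4, taps=2, mu=0.02):
    """Adapt DFE feedback taps with LMS; symbols are +1.0 / -1.0 levels."""
    weights = [0.0] * taps
    decisions = [0.0] * taps          # past decisions, most recent first
    prev_tx = 0.0
    for tx in symbols:
        rx = tx + post_cursor * prev_tx                      # channel adds ISI
        z = rx - sum(w * d for w, d in zip(weights, decisions))
        decision = 1.0 if z >= 0 else -1.0
        error = z - decision                                 # residual "noise"
        # Remove correlation between the error and each past decision
        weights = [w + mu * error * d for w, d in zip(weights, decisions)]
        decisions = [decision] + decisions[:-1]
        prev_tx = tx
    return weights


if __name__ == "__main__":
    rng = random.Random(1)
    random_data = [rng.choice((-1.0, 1.0)) for _ in range(5000)]
    idle_data = [1.0] * 5000
    print("trained on random data:", train_dfe(random_data))
    print("trained on idle pattern:", train_dfe(idle_data))
```

With random data, the taps settle on the channel’s true response (roughly 0.4 and 0). With the constant pattern, the error is also driven to zero, but the correction is split between the two taps (roughly 0.2 each): That’s a perfect fit for the idle pattern, and a wrong one for any other data that follows.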

One could be led to think that the 8b/10b encoding plays a role in this context, but it doesn’t. Even though it cancels out DC on the channel, it does nothing about the correlation between the bits. For example, if the payload for transmission consists of zeros only, the encoded words on the channel will be either 1001110100 or 0110001011. The DC on the channel will remain zero, but the statistical correlation between the bits is far from being zero.
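This is easy to verify numerically. The short Python sketch below takes the code word 1001110100 from the example above, repeated endlessly, and calculates its DC level and its autocorrelation at a couple of lags. For a random bit stream, the autocorrelation at any nonzero lag would average to zero. The function name is made up for illustration.

```python
def circular_autocorrelation(bits, lag):
    """Autocorrelation of an endlessly repeated bit pattern, bits mapped to +1/-1."""
    levels = [1 if b == "1" else -1 for b in bits]
    n = len(levels)
    return sum(levels[i] * levels[(i + lag) % n] for i in range(n)) / n


word = "1001110100"                      # all-zeros payload, as encoded on the channel
dc = sum(1 if b == "1" else -1 for b in word)

if __name__ == "__main__":
    print("DC:", dc)
    for lag in (1, 10):
        print("lag", lag, "->", circular_autocorrelation(word, lag))
```

The DC comes out zero, as promised by the encoding, but the correlation at a lag of 10 bits is as high as it gets, since the word just repeats itself.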

So unless the data is inherently random (e.g. an encrypted stream), using an equalizer means that the data which is supplied by the application to the transmitter must be randomized.

The common solution is a scrambler: XORing the payload data with a pseudo-random sequence of bits, generated by a simple state machine. The receiver must XOR the incoming data with the same sequence in order to retrieve the payload data. The comma (K.28.5) symbol is often used to synchronize both state machines.

In GTX applications, the (by far) most commonly used scrambler is the G(X)=X^16+X^5+X^4+X^3+1 LFSR, which is defined in a friendly manner in the PCIe standard (e.g. the PCI Express Base Specification, rev. 1.1, section 4.2.3 and in particular Appendix C).
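For reference, this is a minimal bit-serial Python sketch of a scrambler based on this polynomial. The initial LFSR value (0xFFFF), taking the scrambling bit from the LFSR’s bit 15, and handling each byte LSB first are my reading of the PCIe spec, so check them against the spec before relying on this. The sketch also ignores the protocol rules on which symbols are scrambled and when the LFSR is reset or held (in PCIe, these relate to control characters, commas and skip symbols).

```python
def scramble(data: bytes, seed: int = 0xFFFF) -> bytes:
    """XOR `data` with the output of the X^16+X^5+X^4+X^3+1 LFSR.
    Applying the function twice with the same seed restores the data."""
    lfsr = seed
    out = bytearray()
    for byte in data:
        result = 0
        for bit in range(8):                     # LSB of each byte goes first
            feedback = (lfsr >> 15) & 1
            result |= (((byte >> bit) & 1) ^ feedback) << bit
            lfsr = (lfsr << 1) & 0xFFFF
            if feedback:
                lfsr ^= 0x0039                   # taps at bits 0, 3, 4 and 5
        out.append(result)
    return bytes(out)


if __name__ == "__main__":
    payload = bytes(8)                           # all zeros: worst case for an equalizer
    scrambled = scramble(payload)
    print(scrambled.hex())
    print(scramble(scrambled) == payload)        # the descrambler is the same function
```

Note that the receiver’s descrambler only works if its LFSR is in the same state as the transmitter’s for each byte, which is exactly why a synchronization mechanism is needed.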

TX/RXUSRCLK and TX/RXUSRCLK2

Almost all signals between the FPGA logic fabric and the GTX are clocked with TXUSRCLK2 (for transmission) and RXUSRCLK2 (for reception). These clocks are supplied by the user application logic, without any special restriction, except that the frequency must match the GTX’ data rate so as to avoid overflows or underflows. A common solution for generating this clock is therefore to drive the GTX’ RX/TXOUTCLK through a BUFG.

The logic fabric is required to supply a second clock in each direction, TXUSRCLK and RXUSRCLK (without the “2” suffix). These two clocks are the parallel data clocks deeper inside the GTX.

The rationale is that sometimes, it’s desired to let the logic fabric work with a word width which is twice as wide as the actual word width. For example, in a high-end data rate application, the GTX’ word width may be set to 40 bits with 8b/10b, so the logic fabric would interface with the GTX through a 32-bit data vector. But because of the high rate, the clock frequency may still be too high for the logic fabric, in which case the GTX allows halving the clock, and applying the data through an 80-bit word. In this case, the logic fabric supplies the 80-bit word clocked with TXUSRCLK2, and is also required to supply a second clock, TXUSRCLK, having twice the frequency and being phase aligned with TXUSRCLK2. TXUSRCLK is for the GTX’ internal use.

A similar arrangement applies for reception.
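The arithmetic behind the two clocks is simple enough to put in a few lines of Python. The sketch assumes that both widths are counted in bits as they appear on the line (that is, including the 8b/10b overhead), and the 10 Gb/s line rate is just a made-up example; the function name is hypothetical.

```python
def usrclk_mhz(line_rate_mbps, internal_width_bits, fabric_width_bits):
    """Return (TXUSRCLK, TXUSRCLK2) frequencies in MHz for a given line rate.
    Both widths include the encoding overhead, i.e. bits on the line per word."""
    txusrclk = line_rate_mbps / internal_width_bits
    txusrclk2 = line_rate_mbps / fabric_width_bits
    return txusrclk, txusrclk2


if __name__ == "__main__":
    print(usrclk_mhz(10000, 40, 40))   # same width on both sides: one clock is enough
    print(usrclk_mhz(10000, 40, 80))   # fabric word twice as wide: TXUSRCLK2 is halved
```

When both figures are equal, the same clock can drive both inputs, which is the simpler arrangement.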

Unless the required data clock rate is too high for the logic fabric (which is usually not the case), this dual-clock arrangement is best avoided, as it requires an MMCM or PLL to generate two phase aligned clocks. Except for the lower clock applied to the logic fabric, there is no other reason for this.

Word alignment

On the transmitting side, the GTX receives a vector of bits, which forms a word for transmission. The width of this word is one of the parameters that are set when the GTX is instantiated, and so is whether 8b/10b encoding is applied. Either way, some format of parallel words is transmitted over the channel in a serialized manner, bit after bit. Unless the protocol explicitly provides for it, there is nothing in this serial bitstream to indicate the words’ boundaries. Hence the receiver has no way, a priori, to recover the word alignment.

The receiver’s GTX’ output consists of a parallel vector of bits, typically with the same width as the transmitter’s. Unless a mechanism is employed by the user logic, the GTX has no way to recover the correct alignment. Without such alignment, the organization into parallel words arrives wrong at the receiver, and possibly as complete garbage, as an incorrect alignment prevents 8b/10b decoding (if employed).

It’s up to the application logic to implement a mechanism for synchronizing the receiver’s word alignment. There are two methodologies for this: Moving the alignment one bit at a time at the receiver’s side (“bit slipping”) until the data arrives properly, or transmitting a predefined pattern (a “comma”) periodically, and synchronizing the receiver when this pattern is detected.

Bit slipping is the less recommended practice, even though simpler to understand. It keeps most of the responsibility in the application logic’s domain: The application logic monitors the arriving data, and issues a bit slip request when it has gathered enough errors to conclude that the alignment is out of sync.
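The decision logic for bit slipping can be as simple as an error counter. Here is a behavioral Python sketch of such a monitor, which would be implemented as a small state machine in the logic fabric. The threshold, the window length and what counts as an “error” are all application-dependent assumptions, and the class name is made up.

```python
class BitSlipMonitor:
    """Requests a bit slip when too many bad words arrive within a window."""

    def __init__(self, error_threshold=16, window=1024):
        self.error_threshold = error_threshold
        self.window = window
        self.words = 0
        self.errors = 0

    def observe(self, word_ok):
        """Feed the status of one received word; True means "slip one bit now"."""
        self.words += 1
        if not word_ok:
            self.errors += 1
        if self.errors >= self.error_threshold:
            self.words = self.errors = 0     # start over after the slip
            return True
        if self.words >= self.window:
            self.words = self.errors = 0     # occasional errors are forgiven
        return False


if __name__ == "__main__":
    monitor = BitSlipMonitor(error_threshold=3, window=10)
    print([monitor.observe(False) for _ in range(3)])
```

The window is what distinguishes a misaligned link (errors all the time) from occasional bit errors on a properly aligned one.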

However, most well-established GTX-based protocols use commas for alignment. This method is easier in that the GTX aligns the word automatically when a comma is detected (if the GTX is configured to do so). If injecting comma characters periodically into the data stream fits well in the protocol, this is probably the preferred solution. The comma character can also be used to synchronize other mechanisms, in particular the scrambler (if employed).

Comma detection may also have false positives, resulting from errors in the raw data channel. As these data channels usually have a very low bit error probability (BER), this possibility can be overlooked in applications where a short-term false alignment resulting from a false comma detected is acceptable. When this is not acceptable, the application logic should monitor the incoming data, and disable the GTX automatic comma alignment through the rxpcommaalignen and/or rxmcommaalignen inputs of the GTX.
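To illustrate what comma alignment boils down to, this Python sketch searches a received bit string for the K.28.5 code word (in either of its two running disparity flavors) and derives the word boundary from its position. The bit strings are written in the order of transmission, which is an assumption of this sketch. The GTX does all this in hardware; the function names here are made up.

```python
COMMAS = ("0011111010", "1100000101")    # K.28.5, both running disparities


def find_word_offset(bitstream):
    """Return the bit offset (0..9) of the word boundary implied by the first
    comma found in `bitstream`, or None if there is no comma in it."""
    positions = [bitstream.find(comma) for comma in COMMAS]
    positions = [p for p in positions if p >= 0]
    return min(positions) % 10 if positions else None


def split_words(bitstream, offset):
    """Cut the stream into complete 10-bit words, starting at `offset`."""
    return [bitstream[i:i + 10] for i in range(offset, len(bitstream) - 9, 10)]


if __name__ == "__main__":
    # Three stray bits, a comma, an encoded zero byte and two more stray bits
    stream = "101" + "0011111010" + "1001110100" + "10"
    offset = find_word_offset(stream)
    print(offset, split_words(stream, offset))
```

The sketch also shows why a false comma is harmful: A bit error that happens to create the comma pattern at some other position would move the word boundary, and everything after it would be cut at the wrong place.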

Tx buffer, to use or not to use

The Tx buffer is a small dual-clock (“asynchronous”) FIFO in the transmitter’s data path, plus some logic that makes sure that it starts off in the state of being half full.

The underlying problem, which the Tx buffer potentially solves, is that the serializer inside the GTX runs on a certain clock (XCLK) while the application logic is exposed to another clock (TXUSRCLK). The frequency of these clocks must be exactly the same to prevent overflow or underflow inside the GTX. This is fairly simple to achieve. Ensuring proper timing relationships between these two clocks is however less trivial.

There are hence two possibilities:

- Not requiring a timing relationship between these clocks (just the same frequency). Instead, use a dual-clock FIFO, which interfaces between these two clock domains. This small FIFO is referred to as the “Tx buffer”. Since it’s part of the GTX’ internal logic, going this path doesn’t require any additional resources from the logic fabric.

- Make sure that the clocks are aligned, by virtue of a state machine. This state machine is implemented in the logic fabric.

The first solution is simpler and requires fewer resources from the FPGA’s logic fabric. Its main drawback is the latency of the Tx buffer, which is typically around 30 TXUSRCLK cycles. While this delay is usually negligible from a functional point of view, it’s not possible to predict its exact magnitude. It’s therefore not possible to use the Tx buffer on several parallel lanes of data, if the protocol requires a known alignment between the data in these lanes, or when an extremely low latency is required.

The second solution requires some extra logic, but there is no significant design effort: The logic that aligns the clocks is included automatically by the IP core generator on Vivado 2014.1 and later, when “Tx/Rx buffer off” mode is chosen.

Xilinx’ GTX documentation is somewhat misleading in that it details the requirements of the state machine in painful detail: There’s no need to read through that long saga in the user guide. Since this logic is generated automatically by the IP core generator, there’s really no reason to dive into this issue. Only note that gtN_tx_fsm_reset_done_out may take a bit longer to assert after a reset (something like 1 ms on a 10 Gb/s lane).

Rx buffer

The Rx buffer (also called “Rx elastic buffer”) is also a dual-clock FIFO, which is placed in the same clock domain gap as the Tx buffer, and has the same function. Bypassing it requires the same kind of alignment mechanism in the logic fabric.

As with its Tx counterpart, bypassing the Rx buffer makes the latency short and deterministic. It’s however less common that such a bypass is practically justified: While a deterministic Tx latency may be required to ensure data alignment between parallel lanes in order to meet certain standard protocol requirements, on the receiving side there are almost always fairly easy methods to compensate for the unknown latency in user logic. Either way, it’s preferred not to rely on the transmitter to meet requirements on data alignment, and to align the data, if required, by virtue of user logic.

Leftover notes

- sysclk_in must be stable when the FPGA wakes up from configuration. A state machine that brings up the transceivers is based upon this clock. It’s referred to as the DRP clock in the wizard.

- It’s important to declare the DRP clock’s frequency correctly, as certain required delays which are measured in nanoseconds are implemented by dwelling for a number of clocks, which is calculated from this frequency.

- In order to transmit a comma, set the txcharisk to 1 (since it’s a vector, it sets the LSB) and the value of the 8 LSBs of the data to 0xBC, which is the code for K.28.5.
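The last note can be illustrated with a few lines of Python that build the values to apply for one transmitted word containing a comma. The sketch assumes a 4-byte word with the comma in the least significant byte and plain data in the other bytes; the function name and the payload bytes are made up for illustration.

```python
K28_5 = 0xBC   # the 8-bit code for the K.28.5 control character


def tx_word_with_comma(payload: bytes, word_bytes: int = 4):
    """Return (txdata, txcharisk) for a word whose least significant byte is
    a comma, followed by `payload` in the more significant bytes."""
    if len(payload) != word_bytes - 1:
        raise ValueError("payload must fill the rest of the word")
    txdata = K28_5
    for i, byte in enumerate(payload, start=1):
        txdata |= byte << (8 * i)
    txcharisk = 0b1                      # only byte 0 is a control character
    return txdata, txcharisk


if __name__ == "__main__":
    txdata, txcharisk = tx_word_with_comma(bytes([0x11, 0x22, 0x33]))
    print(f"txdata = 0x{txdata:08X}, txcharisk = 0b{txcharisk:04b}")
```

Each bit in txcharisk corresponds to one byte in the data word, so setting other bits as well would turn those bytes into control characters too.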