This is just a raw dump of PCIe communication. I wrote a small sniffer on an FPGA and ran some data in a loop to and from the peripheral. The sniffer stored its captured data locally while sniffing, so its own traffic doesn’t appear in the stream. The whole thing ran on a Linux machine.

I thought that after writing a few words about TLP formation, a real-life example would be in order.

I recorded headers only, and then hacked together a Perl script (too ugly and specific for any future use) and got the dump below.

All writes from host to peripheral (marked with “>>”) are register writes. The kernel code sees the BAR at 0xfacf2000, whereas the TLPs carry the bus address, which starts at fdaff000 (see the lspci output below).

Writes from peripheral to host (marked with “<<”) consist of DMA transmissions containing data (longer writes) and status updates (shorter).
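To illustrate how a longer DMA transmission ends up as several write TLPs, here is a minimal Python sketch. It assumes the only limit is the negotiated MaxPayload of 128 bytes (32 words) shown in the lspci output below; `split_dma_write` is a hypothetical helper, not part of the FPGA design, and it ignores other rules such as not crossing a 4 kB boundary.

```python
def split_dma_write(address, length_dw, max_payload_bytes=128):
    """Split one DMA buffer into (address, length_in_words) write TLPs.

    Assumption: the payload cap is the only constraint; the real logic
    in the peripheral may apply further rules.
    """
    max_dw = max_payload_bytes // 4      # 128 bytes -> 32 words of 32 bits
    tlps = []
    while length_dw > 0:
        n = min(length_dw, max_dw)       # fill each TLP up to the cap
        tlps.append((address, n))
        address += n * 4                 # next TLP starts right after this one
        length_dw -= n
    return tlps


# A 75-word buffer at 0xc17000 gives the pattern of the first burst in the dump
for addr, n in split_dma_write(0xC17000, 75):
    print(f"Address = {addr:016x}, length={n}")
```

With these inputs the sketch yields writes of 32, 32 and 11 words at c17000, c17080 and c17100, which is the shape of the first peripheral-to-host burst in the dump.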

And then we have DMA reads made by the peripheral, with read requests (“<<”) and completions (“>>”).
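The relationship between one read request and its completions can be sketched in Python. This is an illustration only, assuming the completer answers in 64-byte pieces (which is what this host does in the dump; other hosts may use larger pieces) and that the address is word-aligned. `expected_completions` is a hypothetical name.

```python
def expected_completions(address, length_dw, chunk_bytes=64):
    """Yield (length_dw, lower_address, byte_count) for each completion.

    byte_count is the number of bytes still owed, including this
    completion; lower_address is the low 7 bits of the address of the
    first byte in this completion.
    """
    remaining = length_dw * 4
    while remaining > 0:
        # Never cross a chunk boundary, so a first piece may be shorter
        size = min(remaining, chunk_bytes - (address % chunk_bytes))
        yield (size // 4, address & 0x7F, remaining)
        address += size
        remaining -= size


# The first read request in the dump: 128 words from 0xc1f000
for length, low_addr, byte_count in expected_completions(0xC1F000, 128):
    print(f"length={length}, low addr={low_addr}, byte count={byte_count}")
```

For this request the sketch produces eight completions of 16 words each, with the byte count going from 512 down to 64 and the low address alternating between 0 and 64, as seen in the dump.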

Each TLP is given in cleartext, followed by the packet’s three or four header words in hexadecimal, in parentheses. In the cleartext part, the address and the (sender’s) bus ID are given in hex; all other fields are in plain decimal.
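For readers who want to check the cleartext against the hex words, here is a small Python sketch of how the first header word breaks down. It is not the Perl script mentioned above; `decode_dw0` is a hypothetical name, and it treats fmt as the two-bit field used in this dump.

```python
def decode_dw0(dw0):
    """Split the first TLP header word into the fields shown in the dump."""
    return {
        "fmt": (dw0 >> 29) & 0x3,    # bits 30:29, header size and data presence
        "type": (dw0 >> 24) & 0x1F,  # bits 28:24
        "length": dw0 & 0x3FF,       # bits 9:0, payload length in 32-bit words
    }


# The four kinds of first words that appear in the dump
for word in (0x40000001, 0x60000020, 0x20000080, 0x4A000010):
    f = decode_dw0(word)
    print(f"{word:08x}: Type = {f['type']}, fmt={f['fmt']}, length={f['length']}")
```

For example, `decode_dw0(0x4a000010)` gives fmt 2, type 10 and length 16, which is how the completions are printed. Note that the length is in 32-bit words, so the read requests with length=128 ask for 512 bytes.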

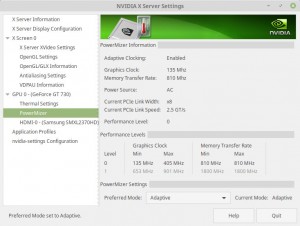

As it turned out, the host sends packets using 32-bit addressing, and the peripheral uses 64 bits (as it was told to).
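The difference shows up in the header size: the low bit of fmt selects between a three-word header with a 32-bit address and a four-word header with a 64-bit address. A minimal Python sketch for memory requests, with `decode_mem_request` as a hypothetical helper name:

```python
def decode_mem_request(words):
    """Decode the header words of a memory read or write request TLP."""
    dw0, dw1 = words[0], words[1]
    four_dw = bool((dw0 >> 29) & 0x1)    # fmt bit 0 set: 4-word header
    if four_dw:
        address = (words[2] << 32) | words[3]   # high word comes first
    else:
        address = words[2]
    return {
        "requester_id": (dw1 >> 16) & 0xFFFF,   # the "Bus ID" in the dump
        "tag": (dw1 >> 8) & 0xFF,
        "last_be": (dw1 >> 4) & 0xF,            # byte enables, last word
        "first_be": dw1 & 0xF,                  # byte enables, first word
        "address": address & ~0x3,              # two lowest bits are reserved
        "addr_bits": 64 if four_dw else 32,
    }


host = decode_mem_request([0x40000001, 0x0000000F, 0xFDAFF008])
peripheral = decode_mem_request([0x60000020, 0x010000FF, 0x00000000, 0x00C17000])
print(host["addr_bits"], hex(host["address"]))              # 32 0xfdaff008
print(peripheral["addr_bits"], hex(peripheral["address"]))  # 64 0xc17000
```

The peripheral's upper address word is zero throughout the dump, so the four-word format carries no extra information here; it is simply what the peripheral was set up to use.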

So before getting to the raw dumps, let’s just see what lspci -vv gave us on the specific device:

01:00.0 Class ff00: Xilinx Corporation Generic FPGA core

Subsystem: Xilinx Corporation Generic FPGA core

Control: I/O+ Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B-

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR-

Latency: 0, Cache Line Size: 4 bytes

Interrupt: pin ? routed to IRQ 42

Region 0: Memory at fdaff000 (64-bit, non-prefetchable) [size=128]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1+,D2+,D3hot+,D3cold-)

Status: D0 PME-Enable- DSel=0 DScale=0 PME-

Capabilities: [48] Message Signalled Interrupts: 64bit+ Queue=0/0 Enable+

Address: 00000000fee0300c Data: 4152

Capabilities: [58] Express Endpoint IRQ 0

Device: Supported: MaxPayload 512 bytes, PhantFunc 0, ExtTag-

Device: Latency L0s unlimited, L1 unlimited

Device: AtnBtn- AtnInd- PwrInd-

Device: Errors: Correctable- Non-Fatal- Fatal- Unsupported-

Device: RlxdOrd+ ExtTag- PhantFunc- AuxPwr- NoSnoop+

Device: MaxPayload 128 bytes, MaxReadReq 512 bytes

Link: Supported Speed 2.5Gb/s, Width x1, ASPM L0s, Port 0

Link: Latency L0s unlimited, L1 unlimited

Link: ASPM Disabled RCB 64 bytes CommClk- ExtSynch-

Link: Speed 2.5Gb/s, Width x1

Capabilities: [100] Device Serial Number 00-00-00-00-00-00-00-0

And now to the dump itself (unfortunately, I didn’t grab any MSI):

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff008

>> (40000001, 0000000f, fdaff008)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff034

>> (40000001, 0000000f, fdaff034)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c17000

<< (60000020, 010000ff, 00000000, 00c17000)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c17080

<< (60000020, 010000ff, 00000000, 00c17080)

<< (Write) Type = 0, fmt=3, length=11

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c17100

<< (6000000b, 010000ff, 00000000, 00c17100)

<< (Write) Type = 0, fmt=3, length=4

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c29200

<< (60000004, 010000ff, 00000000, 00c29200)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff008

>> (40000001, 0000000f, fdaff008)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff034

>> (40000001, 0000000f, fdaff034)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c15000

<< (60000020, 010000ff, 00000000, 00c15000)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c15080

<< (60000020, 010000ff, 00000000, 00c15080)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c15100

<< (60000020, 010000ff, 00000000, 00c15100)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c15180

<< (60000020, 010000ff, 00000000, 00c15180)

<< (Write) Type = 0, fmt=3, length=12

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c15200

<< (6000000c, 010000ff, 00000000, 00c15200)

<< (Write) Type = 0, fmt=3, length=4

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c29200

<< (60000004, 010000ff, 00000000, 00c29200)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff008

>> (40000001, 0000000f, fdaff008)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff034

>> (40000001, 0000000f, fdaff034)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=01

<< Address = 0000000000c1f000

<< (20000080, 010001ff, 00000000, 00c1f000)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000100)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000140)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=0, byte count=384

>> (4a000010, 00000180, 01000100)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=64, byte count=320

>> (4a000010, 00000140, 01000140)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1b000

<< (60000020, 010000ff, 00000000, 00c1b000)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=0, byte count=256

>> (4a000010, 00000100, 01000100)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=64, byte count=192

>> (4a000010, 000000c0, 01000140)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1b080

<< (60000010, 010000ff, 00000000, 00c1b080)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=0, byte count=128

>> (4a000010, 00000080, 01000100)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=01

>> Completion low addr=64, byte count=64

>> (4a000010, 00000040, 01000140)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c000

<< (60000020, 010000ff, 00000000, 00c1c000)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=02

<< Address = 0000000000c1f200

<< (20000080, 010002ff, 00000000, 00c1f200)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1f400

<< (60000020, 010000ff, 00000000, 00c1f400)

<< (Write) Type = 0, fmt=3, length=2

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c29200

<< (60000002, 010000ff, 00000000, 00c29200)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c100

<< (60000010, 010000ff, 00000000, 00c1c100)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000200)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000240)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=0, byte count=384

>> (4a000010, 00000180, 01000200)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c140

<< (60000020, 010000ff, 00000000, 00c1c140)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=64, byte count=320

>> (4a000010, 00000140, 01000240)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=0, byte count=256

>> (4a000010, 00000100, 01000200)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c1c0

<< (60000010, 010000ff, 00000000, 00c1c1c0)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=64, byte count=192

>> (4a000010, 000000c0, 01000240)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=0, byte count=128

>> (4a000010, 00000080, 01000200)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c200

<< (60000020, 010000ff, 00000000, 00c1c200)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=02

>> Completion low addr=64, byte count=64

>> (4a000010, 00000040, 01000240)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c280

<< (60000010, 010000ff, 00000000, 00c1c280)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c2c0

<< (60000020, 010000ff, 00000000, 00c1c2c0)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff008

>> (40000001, 0000000f, fdaff008)

<< (Write) Type = 0, fmt=3, length=2

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c29200

<< (60000002, 010000ff, 00000000, 00c29200)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff008

>> (40000001, 0000000f, fdaff008)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff034

>> (40000001, 0000000f, fdaff034)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=03

<< Address = 0000000000c20000

<< (20000080, 010003ff, 00000000, 00c20000)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000300)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000340)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=0, byte count=384

>> (4a000010, 00000180, 01000300)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c340

<< (60000020, 010000ff, 00000000, 00c1c340)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=64, byte count=320

>> (4a000010, 00000140, 01000340)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=0, byte count=256

>> (4a000010, 00000100, 01000300)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=64, byte count=192

>> (4a000010, 000000c0, 01000340)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c3c0

<< (60000020, 010000ff, 00000000, 00c1c3c0)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=0, byte count=128

>> (4a000010, 00000080, 01000300)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=03

>> Completion low addr=64, byte count=64

>> (4a000010, 00000040, 01000340)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c440

<< (60000010, 010000ff, 00000000, 00c1c440)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=04

<< Address = 0000000000c20200

<< (20000080, 010004ff, 00000000, 00c20200)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c480

<< (60000020, 010000ff, 00000000, 00c1c480)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c500

<< (60000010, 010000ff, 00000000, 00c1c500)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000400)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000440)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=0, byte count=384

>> (4a000010, 00000180, 01000400)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c540

<< (60000020, 010000ff, 00000000, 00c1c540)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=64, byte count=320

>> (4a000010, 00000140, 01000440)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=0, byte count=256

>> (4a000010, 00000100, 01000400)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c5c0

<< (60000020, 010000ff, 00000000, 00c1c5c0)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=64, byte count=192

>> (4a000010, 000000c0, 01000440)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=0, byte count=128

>> (4a000010, 00000080, 01000400)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=04

>> Completion low addr=64, byte count=64

>> (4a000010, 00000040, 01000440)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c640

<< (60000020, 010000ff, 00000000, 00c1c640)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c6c0

<< (60000010, 010000ff, 00000000, 00c1c6c0)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c700

<< (60000010, 010000ff, 00000000, 00c1c700)

<< (Write) Type = 0, fmt=3, length=2

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c29200

<< (60000002, 010000ff, 00000000, 00c29200)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff008

>> (40000001, 0000000f, fdaff008)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff034

>> (40000001, 0000000f, fdaff034)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=05

<< Address = 0000000000c21000

<< (20000080, 010005ff, 00000000, 00c21000)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000500)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000540)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=0, byte count=384

>> (4a000010, 00000180, 01000500)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c740

<< (60000020, 010000ff, 00000000, 00c1c740)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=64, byte count=320

>> (4a000010, 00000140, 01000540)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=0, byte count=256

>> (4a000010, 00000100, 01000500)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=64, byte count=192

>> (4a000010, 000000c0, 01000540)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c7c0

<< (60000020, 010000ff, 00000000, 00c1c7c0)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=0, byte count=128

>> (4a000010, 00000080, 01000500)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=05

>> Completion low addr=64, byte count=64

>> (4a000010, 00000040, 01000540)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c840

<< (60000010, 010000ff, 00000000, 00c1c840)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=06

<< Address = 0000000000c21200

<< (20000080, 010006ff, 00000000, 00c21200)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c880

<< (60000020, 010000ff, 00000000, 00c1c880)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c900

<< (60000010, 010000ff, 00000000, 00c1c900)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000600)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000640)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=0, byte count=384

>> (4a000010, 00000180, 01000600)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c940

<< (60000020, 010000ff, 00000000, 00c1c940)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=64, byte count=320

>> (4a000010, 00000140, 01000640)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=0, byte count=256

>> (4a000010, 00000100, 01000600)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1c9c0

<< (60000020, 010000ff, 00000000, 00c1c9c0)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=64, byte count=192

>> (4a000010, 000000c0, 01000640)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=0, byte count=128

>> (4a000010, 00000080, 01000600)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=06

>> Completion low addr=64, byte count=64

>> (4a000010, 00000040, 01000640)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1ca40

<< (60000020, 010000ff, 00000000, 00c1ca40)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1cac0

<< (60000010, 010000ff, 00000000, 00c1cac0)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1cb00

<< (60000010, 010000ff, 00000000, 00c1cb00)

<< (Write) Type = 0, fmt=3, length=2

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c29200

<< (60000002, 010000ff, 00000000, 00c29200)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff008

>> (40000001, 0000000f, fdaff008)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff034

>> (40000001, 0000000f, fdaff034)

>> (Write) Type = 0, fmt=2, length=1

>> Bus ID: 0000, Tag=00

>> Address = fdaff030

>> (40000001, 0000000f, fdaff030)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=07

<< Address = 0000000000c22000

<< (20000080, 010007ff, 00000000, 00c22000)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000700)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000740)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=0, byte count=384

>> (4a000010, 00000180, 01000700)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=64, byte count=320

>> (4a000010, 00000140, 01000740)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1cb40

<< (60000020, 010000ff, 00000000, 00c1cb40)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=0, byte count=256

>> (4a000010, 00000100, 01000700)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=64, byte count=192

>> (4a000010, 000000c0, 01000740)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1cbc0

<< (60000010, 010000ff, 00000000, 00c1cbc0)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=0, byte count=128

>> (4a000010, 00000080, 01000700)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=07

>> Completion low addr=64, byte count=64

>> (4a000010, 00000040, 01000740)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1cc00

<< (60000020, 010000ff, 00000000, 00c1cc00)

<< (Read Rq) Type = 0, fmt=1, length=128

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c22200

<< (20000080, 010000ff, 00000000, 00c22200)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c22400

<< (60000020, 010000ff, 00000000, 00c22400)

<< (Write) Type = 0, fmt=3, length=16

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c1cd00

<< (60000010, 010000ff, 00000000, 00c1cd00)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=00

>> Completion low addr=0, byte count=512

>> (4a000010, 00000200, 01000000)

>> (Completion) Type = 10, fmt=2, length=16

>> Bus ID: 0000, Tag=00

>> Completion low addr=64, byte count=448

>> (4a000010, 000001c0, 01000040)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13000

<< (60000020, 010000ff, 00000000, 00c13000)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13080

<< (60000020, 010000ff, 00000000, 00c13080)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13100

<< (60000020, 010000ff, 00000000, 00c13100)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13180

<< (60000020, 010000ff, 00000000, 00c13180)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13200

<< (60000020, 010000ff, 00000000, 00c13200)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13280

<< (60000020, 010000ff, 00000000, 00c13280)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13300

<< (60000020, 010000ff, 00000000, 00c13300)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13380

<< (60000020, 010000ff, 00000000, 00c13380)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13400

<< (60000020, 010000ff, 00000000, 00c13400)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13480

<< (60000020, 010000ff, 00000000, 00c13480)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13500

<< (60000020, 010000ff, 00000000, 00c13500)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13580

<< (60000020, 010000ff, 00000000, 00c13580)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13600

<< (60000020, 010000ff, 00000000, 00c13600)

<< (Write) Type = 0, fmt=3, length=32

<< Bus ID: 0100, Tag=00

<< Address = 0000000000c13680

<< (60000020, 010000ff, 00000000, 00c13680)

(and this is where the sniffer's memory got full)